Emerging research suggests that GPT-4 may have a role in enhancing accuracy and streamlining reviews of radiology reports.

For the retrospective study, recently published in Radiology, researchers compared the performance of GPT-4 (OpenAI) with that of six radiologists of varying experience in detecting errors (ranging from inappropriate wording and spelling mistakes to side confusion) in 200 radiology reports. The study authors noted that 150 errors were deliberately added to 100 of the reports being reviewed.

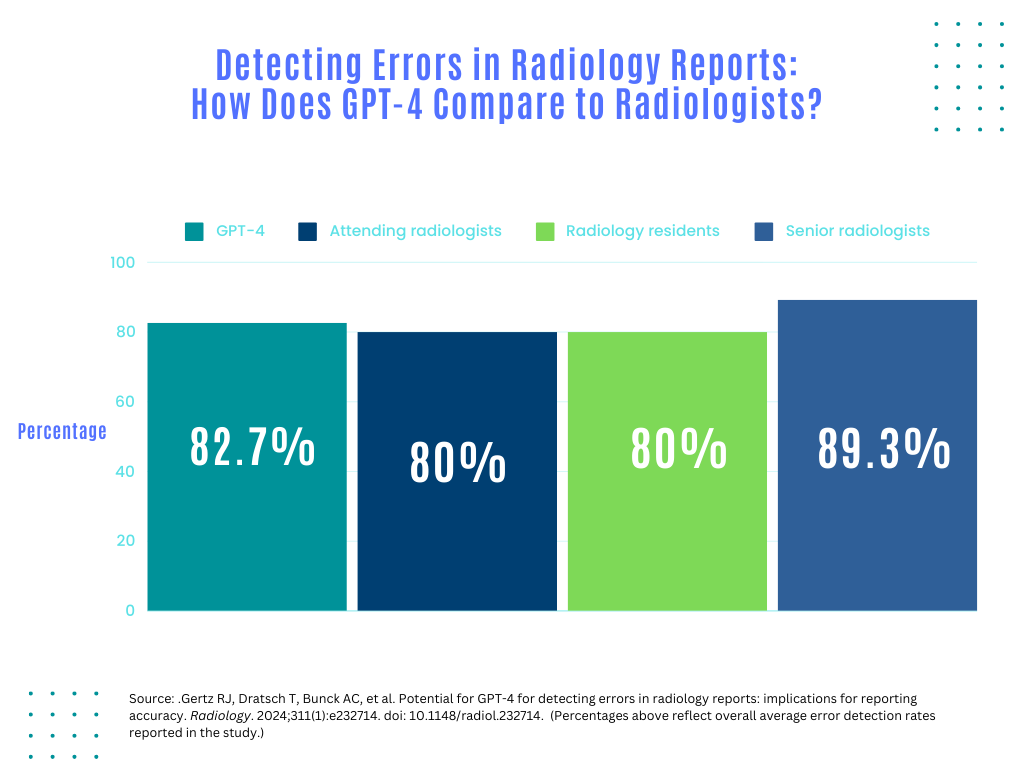

The study authors found that the overall average error detection rate for GPT-4 reviews of radiology reports (82.7 percent) was comparable to that of attending radiologists (80 percent) and radiology residents (80 percent) but lower than the average for the two senior radiologists who reviewed the reports (89.3 percent).

For radiology reports specific to radiography, GPT-4 had an average error detection rate (85 percent) that was comparable to that of senior radiologists (86 percent) and significantly higher than that of attending radiologists (78 percent) and radiology residents (77 percent).

“Our results suggest that large language models (LLMs) may perform the task of radiology report proofreading with a level of proficiency comparable to that of most human readers. (As) errors in radiology reports are likely to occur across all experience levels, our findings may be reflective of typical clinical environments. This underscores the potential of artificial intelligence to improve radiology workflows beyond image interpretation,” wrote lead study author Roman J. Gertz, M.D., a resident in the Department of Radiology at University Hospital of Cologne in Cologne, Germany, and colleagues.

The researchers noted that GPT-4 was significantly faster in reviewing radiology reports than radiologists. In comparison to the mean reading times for senior radiologists (51.1 seconds), attending radiologists (43.2 seconds) and radiology residents (66.6 seconds), the study authors found the mean reading time for GPT-4 was 3.5 seconds. They also noted a total reading time of 0.19 hours (about 11 minutes) for GPT-4 review of 200 radiology reports versus 1.4 hours for the fastest reviewing radiologist.
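To put the per-report reading times above on a common scale, the following minimal sketch divides each group's mean reading time by the GPT-4 mean. The figures come from the article; the function and variable names are illustrative assumptions, and the ratios are simple arithmetic rather than results reported by the study authors.

```python
# Mean reading time per report in seconds, as quoted in the article.
MEAN_READING_SECONDS = {
    "senior radiologists": 51.1,
    "attending radiologists": 43.2,
    "radiology residents": 66.6,
}
GPT4_MEAN_SECONDS = 3.5


def speedup_over_gpt4(human_seconds: float, gpt4_seconds: float = GPT4_MEAN_SECONDS) -> float:
    """Return how many times longer a human group took per report than GPT-4."""
    return human_seconds / gpt4_seconds


# Hypothetical helper output: ratio of each group's mean time to the GPT-4 mean.
speedups = {
    group: speedup_over_gpt4(seconds)
    for group, seconds in MEAN_READING_SECONDS.items()
}

for group, ratio in speedups.items():
    print(f"{group}: about {ratio:.1f}x the GPT-4 mean reading time")
```

By this rough calculation, each radiologist group took somewhere between 12 and 19 times as long per report as GPT-4.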

“GPT-4 demonstrated a shorter processing time than any human reader, and the mean reading time per report for GPT-4 was faster than the fastest radiologist in the study … ,” noted Gertz and colleagues.

Three Key Takeaways

- Efficiency boost. GPT-4 demonstrates significantly faster reading times compared to human radiologists, highlighting its potential to streamline radiology report reviews and improve workflow efficiency. Its mean reading time per report was notably faster than even the quickest radiologist in the study, suggesting substantial time-saving benefits.

- Comparable error detection. GPT-4 exhibits a comparable rate of error detection to attending radiologists and radiology residents for overall errors in radiology reports. This suggests that large language models (LLMs) like GPT-4 can perform the task of radiology report proofreading with a level of proficiency comparable to experienced human readers, potentially reducing the burden on radiologists.

- Varied error detection proficiency. While GPT-4 performs well in detecting errors of omission, it shows lower proficiency in identifying cases of side confusion compared to senior radiologists and radiology residents. Understanding these nuances in error detection may help optimize the integration of AI tools like GPT-4 into radiology workflows.

While GPT-4 had a comparable rate of error detection to senior radiologists and superior rates of error detection to radiology residents and attendings for errors of omission, the researchers noted that GPT-4 had significantly lower average detection in cases of side confusion (78 percent) in comparison to senior radiologists (91 percent) and radiology residents (89 percent).

For radiology reports involving computed tomography (CT) or magnetic resonance imaging (MRI), the study authors found that GPT-4 had a comparable average detection rate (81 percent) to attending radiologists (81 percent) and radiology residents (82 percent), but one that was 11 percentage points lower than that of senior radiologists (92 percent).
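The gap between GPT-4 and senior radiologists on CT and MRI reports can be stated either in percentage points or as a relative difference, and the two are easy to confuse. The sketch below, which uses hypothetical helper names and only the two rates quoted above, shows both calculations.

```python
def gap_in_points(reference_pct: float, other_pct: float) -> float:
    """Absolute gap between two detection rates, in percentage points."""
    return reference_pct - other_pct


def relative_gap_pct(reference_pct: float, other_pct: float) -> float:
    """Gap expressed as a percentage of the reference rate."""
    return (reference_pct - other_pct) / reference_pct * 100


# Detection rates for CT/MRI reports as quoted in the article.
SENIOR_RADIOLOGISTS_PCT = 92
GPT4_PCT = 81

points = gap_in_points(SENIOR_RADIOLOGISTS_PCT, GPT4_PCT)
relative = relative_gap_pct(SENIOR_RADIOLOGISTS_PCT, GPT4_PCT)

print(f"Absolute gap: {points} percentage points")
print(f"Relative gap: {relative:.1f} percent of the senior radiologists' rate")
```

The absolute gap is 11 percentage points, while the relative shortfall works out to roughly 12 percent of the senior radiologists' detection rate.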

In an accompanying editorial, Howard P. Forman, M.D., M.B.A., noted that exploring the utility of assistive technologies is essential in order to devote more focus to practicing at the “top of our license” in an environment of declining reimbursement and escalating imaging volume. However, Dr. Forman cautioned against over-reliance on LLMs.

“After review by the imperfect LLM, will the radiologist spend the same time proofreading? Or will that radiologist be comforted in knowing that most errors had already been found and do a more cursory review? Could this inadvertently lead to worse reporting?” questioned Dr. Forman, a professor of radiology, economics, public health, and management at Yale University.

Regarding study limitations, the authors conceded that the deliberately introduced errors do not reflect the variety of errors that may be found in radiology reports. They also suggested that the experimental nature of the study may have contributed to a higher error detection rate due to a Hawthorne effect, in which the study participants altered their reviews of radiology reports because they knew they were being observed. The researchers acknowledged that their assessment of possible cost savings from using GPT-4 to review radiology reports does not reflect the costs of integrating a large language model into existing radiology workflows.